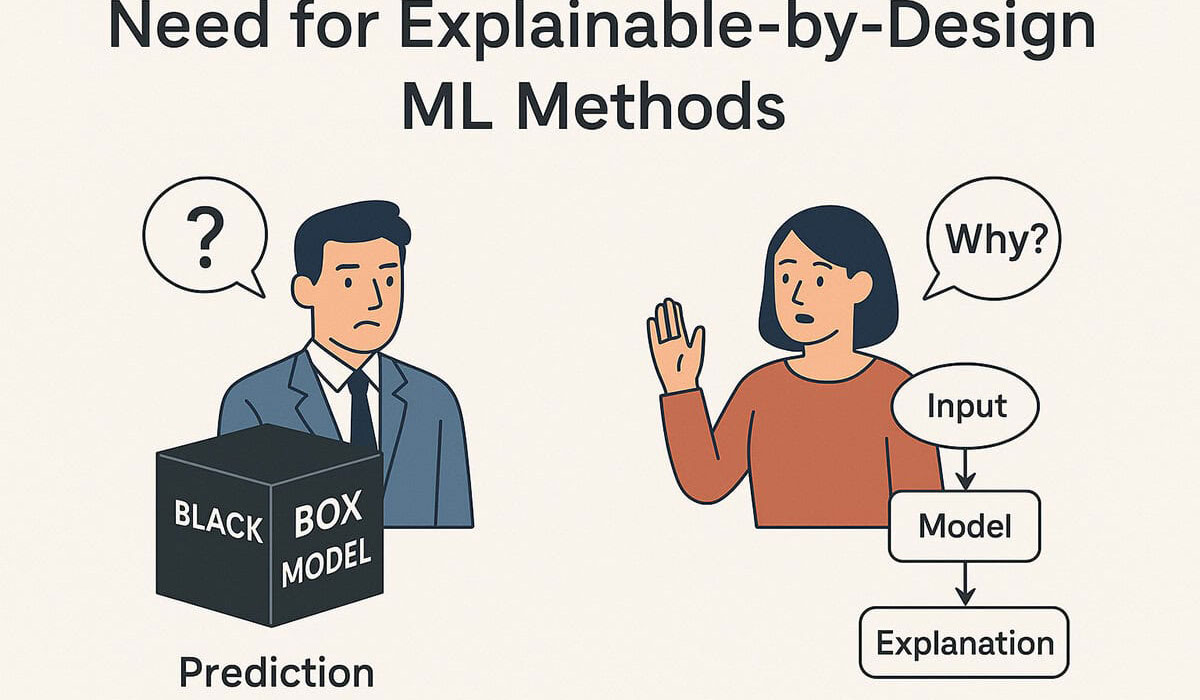

Researchers at the University of Michigan have developed a new AI system that helps explain how artificial intelligence makes decisions. Called Constrained Concept Refinement (CCR), the system makes it easier to tell when an AI is giving accurate information and when it may be making mistakes. Compared with other AI models, CCR is more accurate and easier to interpret, and it also runs up to ten times faster. The full article explains how this new system could help make AI more reliable and easier to trust.